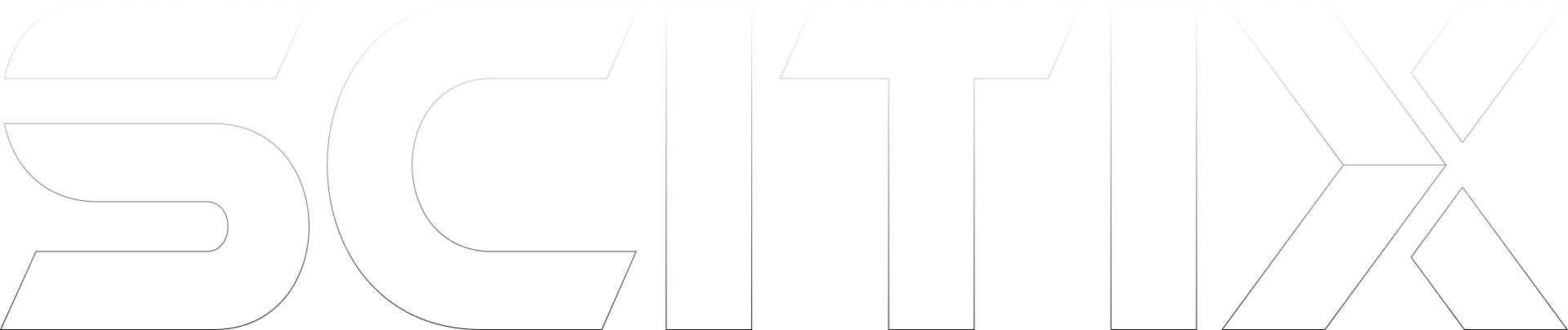

Inference

- Optimized GPU scheduling for real-time responses

- Support for streaming output & long-context inference

- Works with open-source & custom models

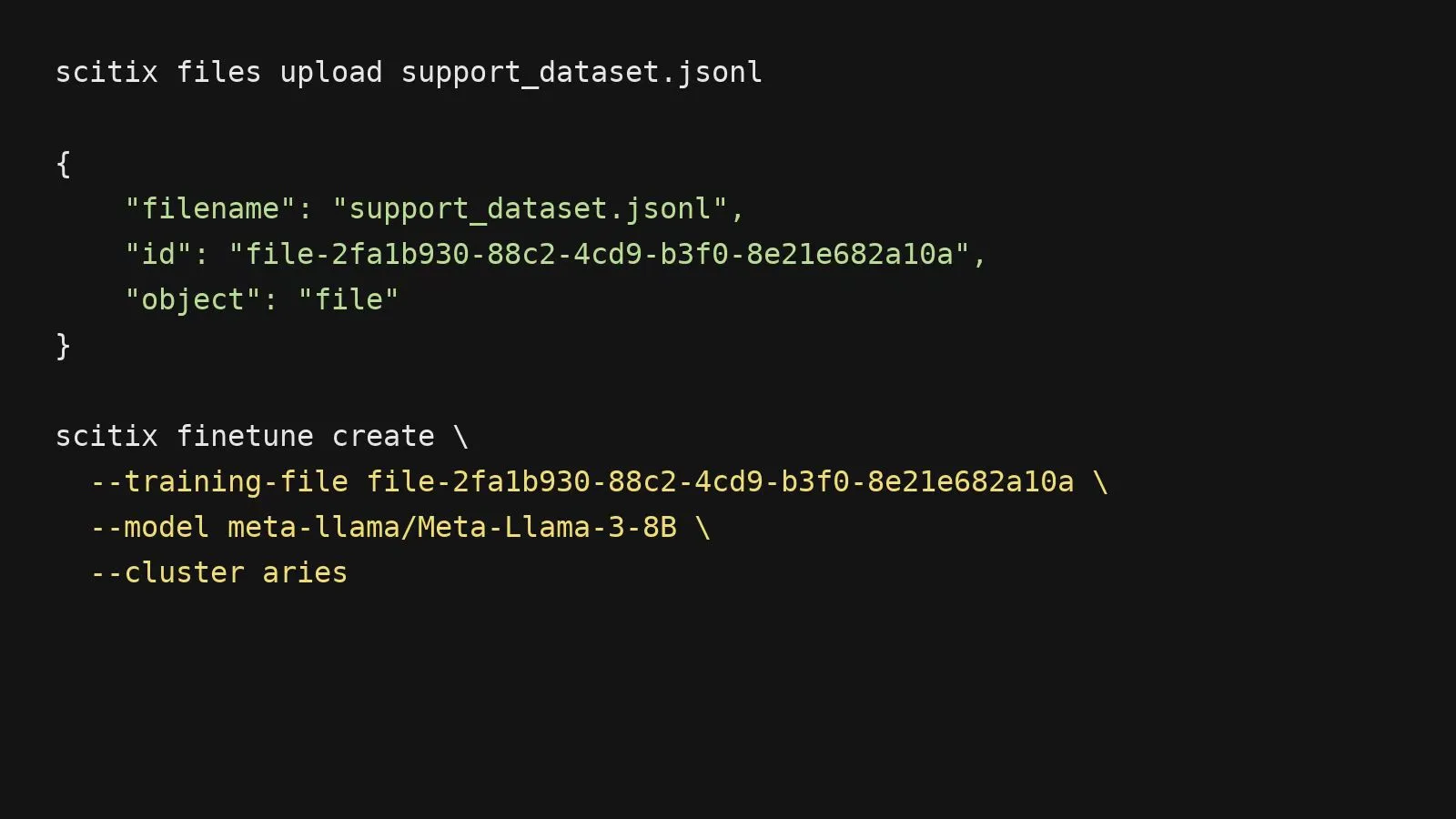

Fine-Tuning

- Full control of model parameters

- Support for LoRA & full fine-tuning

- Seamless integration with APIs
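To give a rough sense of why LoRA is offered alongside full fine-tuning, the sketch below compares trainable parameter counts for a single weight matrix. The layer size (4096 x 4096) and rank (8) are illustrative assumptions, not ScitiX defaults, and `lora_trainable_params` is a hypothetical helper name.

```python
def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """LoRA trains two low-rank factors, A (rank x d_in) and B (d_out x rank)."""
    return rank * (d_in + d_out)


def full_trainable_params(d_in: int, d_out: int) -> int:
    """Full fine-tuning updates every entry of the d_out x d_in weight matrix."""
    return d_in * d_out


# Assumed example dimensions, chosen only for illustration.
full = full_trainable_params(4096, 4096)
lora = lora_trainable_params(4096, 4096, rank=8)
print(f"{lora:,} vs {full:,} ({lora / full:.2%})")  # 65,536 vs 16,777,216 (0.39%)
```

Under these assumptions LoRA trains well under 1% of the layer's weights, which is the usual trade-off against the full control that full fine-tuning provides.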

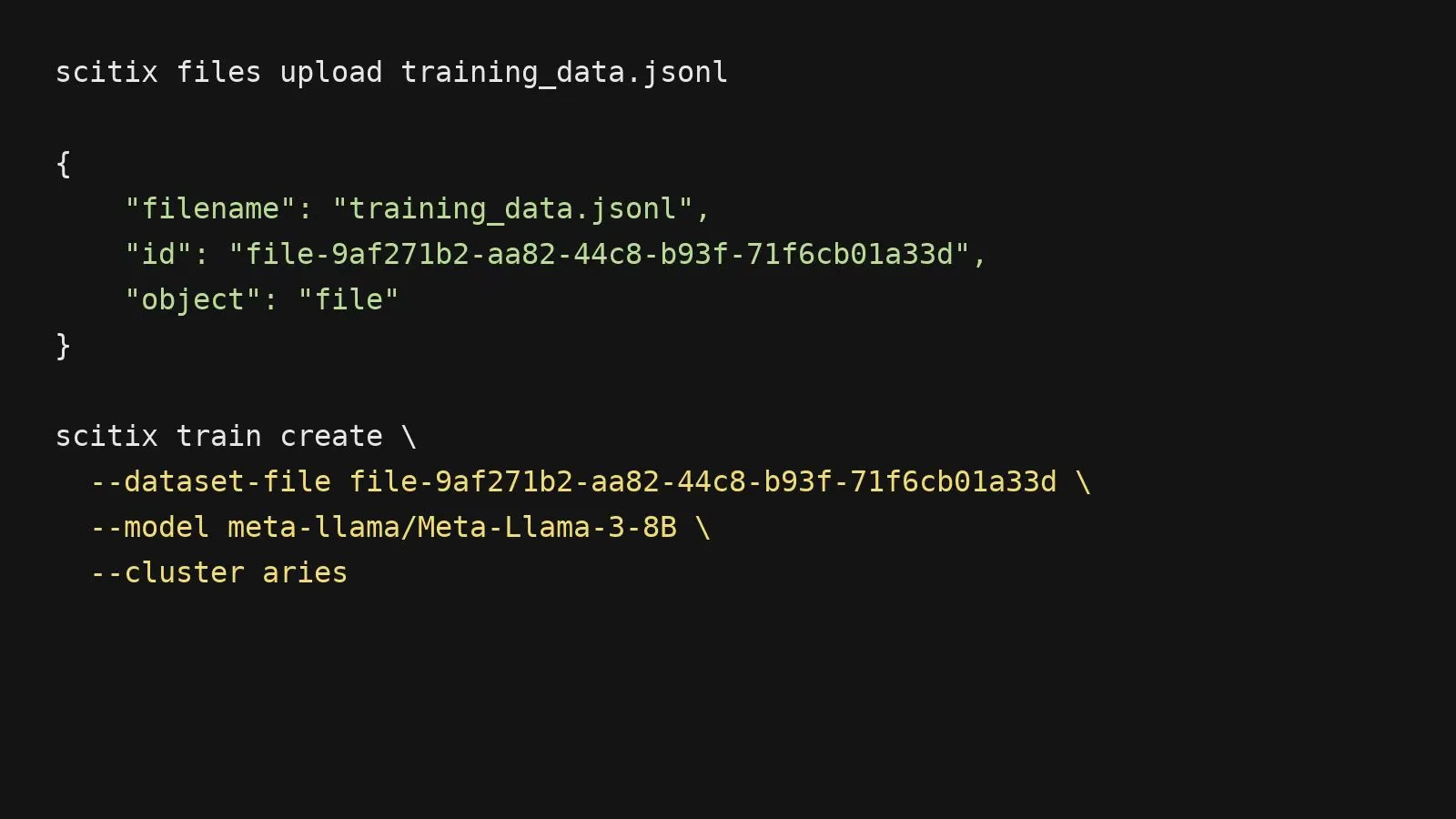

Training

- Multi-node distributed training & elastic scaling

- Fast data pipeline with on-cluster storage

- Support for PyTorch & Lightning

- Checkpointing & logs built-in

Stable

- 99.9% uptime SLA

- Multi-region failover

- Error rate <0.01% for mission
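As a quick way to read the 99.9% uptime figure, the minimal sketch below converts an uptime percentage into a downtime budget. The 30-day month, the 365-day year, and the function name are assumptions for illustration; the actual SLA terms may define the measurement window differently.

```python
def allowed_downtime_minutes(sla_percent: float, period_days: float = 30.0) -> float:
    """Minutes of downtime permitted over the period at the given uptime percentage."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - sla_percent / 100)


monthly = allowed_downtime_minutes(99.9)         # assumed 30-day month
yearly = allowed_downtime_minutes(99.9, 365.0)   # assumed 365-day year
print(f"{monthly:.1f} min/month, {yearly / 60:.2f} h/year")  # 43.2 min/month, 8.76 h/year
```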

Effective

- 90%+ GPU utilization

- Job startup latency <60s

- 30-40% lower cost

Intelligent

- 25% higher allocation efficiency

- <1s monitoring latency

- Predictive auto-scaling

Qwen3 is the latest generation of large language models in the Qwen series, offering a comprehensive suite of dense and mixture-of-experts (MoE) models.

DeepSeek-V3.2 is a cutting-edge large language model developed by DeepSeek-AI, representing a significant advancement in open-source AI capabilities.

gpt-oss-120b is OpenAI's 120-billion-parameter open-weight large language model, designed to deliver high performance and strong reasoning ability for research and commercial use.

gpt-oss-20b is an open-weight, 20-billion-parameter mixture-of-experts (MoE) model released by OpenAI under the Apache 2.0 license, designed for powerful reasoning, strong instruction following, and agentic workflows including tool use.

google/gemma-3-27b-it is a 27-billion-parameter model from Google's Gemma family, engineered for multimodal understanding and generation. Built on the same research and technology that powers the Gemini models, this instruction-tuned variant is designed for high performance and versatile deployment.

Kimi K2-Instruct-0905 is the latest, most capable version of Kimi K2. It is a state-of-the-art mixture-of-experts (MoE) language model, featuring 32 billion activated parameters and a total of 1 trillion parameters.


Our Vision

ScitiX envisions a future where cities think, adapt, and grow like living systems. Like a breathing interface, AI-powered infrastructure feels organic, aware, and undeniably alive, shaping environments that respond to people, not the other way around.

By harnessing open innovation and limitless compute, we're building the backbone for smarter cities: sustainable, intelligent, and always evolving.

With ScitiX, every spark of AI creativity moves us closer to a world where technology and humanity thrive together.

Self-service

Order on your own, with a comprehensive user guide that walks you through every step. The docs have you covered whether you're prototyping or scaling.

Open Source

Our infrastructure is built in the open: transparent, verifiable, and always evolving with the community.

Explore our GitHub to see what we're building, contribute your ideas, or just star the repo to stay connected.

Contact Us